Some of these programming assignments are described in the course book.

In the 9th Edition (the paper version), kernel modules are described in "Programming Projects" in chapter 2 (pages 94-98) and in project 2 in "Programming Projects" in chapter 3 (pages 156-158).

In the 10th Edition (the electronic version), kernel modules are described in "Programming Projects" in chapter 2 (there are no page numbers) and in projects 2 and 3 in "Programming Projects" in chapter 3.

The 10th Edition of the book and its accompanying virtual machine use Linux kernel version 4.4. (The machines in T002 have this kernel.) The 9th Edition of the book and its virtual machine use an older kernel version, 3.16.

Here is a graph showing its performance on a computer with an Intel Core i7-6850K CPU from 2016. It has 6 cores, with hyperthreading. As you can see, performance increases linearly with the number of threads up to 12 threads, but with 13 or more threads the increase stops. Question: Why is that?


Here is a graph showing the same program's performance on a Mac Mini from 2020, which has Apple's own M1 processor. It has 8 cores and no hyperthreading. Question: Can you explain these results, especially the "knee" on the curve at 4 threads? (Wikipedia has an article about the M1 processor: https://en.wikipedia.org/wiki/Apple_M1)


Question: The first computer above runs Linux, and the second (the Mac Mini) runs macOS. Can you guess something, just from these two graphs, about how the schedulers in Linux and macOS compare when it comes to handling large numbers of threads?

An added graph from June 28, 2021:

However, when I ran the same program on the same Mac Mini another time, I got the following graph. Questions: What do you think happened? What implications does it have for how you should run performance tests?


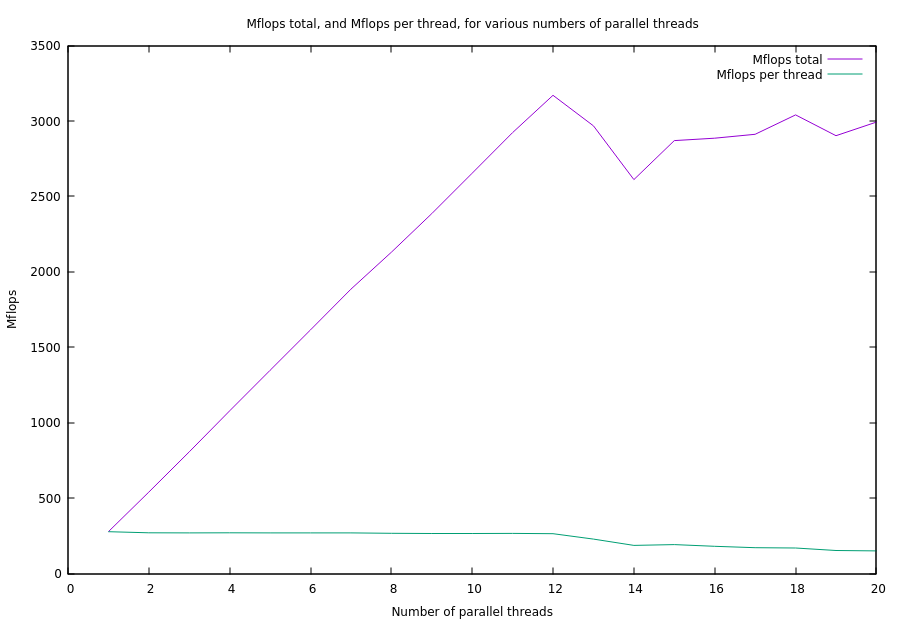

Here is a graph that shows the program's performance on the university's ThinLinc server (thinlinc.oru.se), which runs on an Intel Xeon CPU E5-2660 v3 from 2014. According to its specifications, this processor type has 10 cores, with hyperthreading. Question: Can you explain these results?


Run the same thread performance test program on your computer. Question: Which results do you get? Explain your results!

Try to utilize the hardware! For example, don't use a single lock, which locks the entire world. That would mean that no matter how many CPU cores you have, only one monster fight can happen at the same time.

Start without locking, and see if you get incorrect results.

See also the "MONSTER-WORLD-README" file.

Possibly useful command:

    gcc -Wall -Wextra -std=c11 -O3 multi-threaded-monster-world.c -lpthread -o multi-threaded-monster-world

With the following Makefile you can just type "make multi-threaded-monster-world":

    CC = gcc
    CFLAGS += -Wall -Wextra -std=c11 -O3
    LDLIBS += -lpthread

Questions:

If you are running this on a modern Linux (kernel version 4.4.0-20 or later) on a computer where Secure Boot is enabled, it will fail with the error message "Operation not permitted", and the kernel messages (dmesg) will show "insmod: Loading of unsigned module is restricted; see man kernel_lockdown.7". This is because kernel modules have to be signed. Either turn off Secure Boot (not recommended), run in a virtual machine without Secure Boot, or sign your module (after each recompilation). Here is a Stack Overflow answer that explains how to sign your kernel modules:

(They are signing a module called vboxdrv, so you need to replace that with the name of your module.)

There are macros to loop through processes, and they might be different between kernel 3 and 4.

Update: In later kernels (4.11 and newer), the macro for_each_process has been moved to the header file <linux/sched/signal.h>.
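To make the header difference concrete, here is a minimal sketch of a module that walks the process list. It is not the book's code: the names `list_tasks_init` and `list_tasks_exit` and the log format are made up, and the version check assumes that the header split happened in kernel 4.11. It only builds against kernel headers with the usual kernel module Makefile, not as an ordinary user-space program.

```c
#include <linux/init.h>
#include <linux/kernel.h>
#include <linux/module.h>
#include <linux/rcupdate.h>
#include <linux/sched.h>
#include <linux/version.h>
#if LINUX_VERSION_CODE >= KERNEL_VERSION(4, 11, 0)
#include <linux/sched/signal.h>  /* for_each_process is defined here in newer kernels */
#endif

/* Called on insmod: print the PID and command name of every process. */
static int __init list_tasks_init(void)
{
    struct task_struct *task;

    rcu_read_lock();  /* protect the task list while we walk it */
    for_each_process(task)
        pr_info("list_tasks: pid %d comm %s\n", task->pid, task->comm);
    rcu_read_unlock();
    return 0;
}

/* Called on rmmod. */
static void __exit list_tasks_exit(void)
{
    pr_info("list_tasks: unloaded\n");
}

module_init(list_tasks_init);
module_exit(list_tasks_exit);

MODULE_LICENSE("GPL");
MODULE_DESCRIPTION("Sketch: print every process with for_each_process");
```

Note that for_each_process visits one entry per process (the thread group leader), not one entry per thread, so a multi-threaded program shows up as a single line in this output.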

Run the threaded program from task 1 or task 2 to verify that your module works.

Change the multi-threaded program so it actually is as fast as we hope! With five monsters, it should be (at least approximately) five times faster, with ten monsters, ten times faster, and with twenty monsters, twenty times faster, assuming we run it on a computer that can handle that many threads in parallel.

This is not an easy task. You might need to significantly re-structure how the program works.
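One way to reason about these target speedups is Amdahl's law. The symbols are not part of the assignment: here $p$ is the fraction of the running time that can be executed in parallel, and $n$ is the number of threads that really run at the same time.

$$
S(n) = \frac{1}{(1 - p) + \dfrac{p}{n}}
$$

As a worked example under those assumptions, with $p = 0.95$ and $n = 20$:

$$
S(20) = \frac{1}{0.05 + \dfrac{0.95}{20}} = \frac{1}{0.0975} \approx 10.3
$$

So even if only 5 % of the time is spent in serialized code (for example, waiting for a shared lock), twenty threads give roughly a tenfold speedup, not a twentyfold one. Getting close to twenty requires $p$ to be very close to 1.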